How to Run DeepSeek-R1 on Hyperstack

In this article

- About DeepSeek-R1 LLM

- Advantages of Deploying DeepSeek-R1 on Hyperstack

- How to Deploy DeepSeek-R1 on Hyperstack

- FAQs

About DeepSeek-R1 LLM

DeepSeek-R1 is an open-source Mixture-of-Experts (MoE) language model with 671B total parameters, of which 37B are activated per token, and a 128K context length. It delivers high performance through reinforcement-learning-based training, outperforming other open-source models on reasoning tasks while remaining cost-efficient and scalable. Built on the DeepSeek-V3 architecture, DeepSeek-R1 combines Multi-head Latent Attention (MLA) with DeepSeekMoE to improve inference speed and training efficiency. This architecture also uses an auxiliary-loss-free load-balancing strategy and a Multi-Token Prediction (MTP) objective for improved performance.
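With 37B of 671B parameters active, only about 5.5% of the weights take part in each token's forward pass. The toy sketch below shows the general top-k MoE routing idea behind that distinction. It is a generic illustration under our own assumptions (a dot-product router, softmax over the selected experts, tiny hand-made "experts"), not DeepSeekMoE's actual routing, which adds shared experts and the auxiliary-loss-free load balancing mentioned above.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    token: Vector,
    router_weights: Sequence[Vector],
    experts: Sequence[Expert],
    top_k: int = 2,
) -> Tuple[Vector, List[int]]:
    """Route one token to its top-k experts and mix their outputs.

    Only the selected experts run, so per-token compute scales with the
    activated parameters rather than the total parameter count.
    """
    # Router score per expert: dot product of the token with that expert's router vector.
    scores = [sum(t * w for t, w in zip(token, rw)) for rw in router_weights]
    # Keep the indices of the k highest-scoring experts.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Gate weights are normalized over the chosen experts only.
    gates = softmax([scores[i] for i in chosen])
    out = [0.0] * len(token)
    for gate, i in zip(gates, chosen):
        expert_out = experts[i](token)  # unselected experts are never evaluated
        out = [o + gate * y for o, y in zip(out, expert_out)]
    return out, chosen


# Toy example (hypothetical): four "experts" that just scale their input.
experts = [lambda v, c=c: [c * x for x in v] for c in (1.0, 2.0, 3.0, 4.0)]
router = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
out, chosen = moe_forward([2.0, 1.0], router, experts, top_k=2)
print(chosen)  # [0, 1]: only 2 of the 4 experts ran for this token
```

In a real MoE model, every expert's weights must still be resident in GPU memory even though only a few run per token, which is why the total parameter count drives hardware sizing.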

Key Features

- Advanced Reasoning Capabilities: Excels in logical inference, mathematical reasoning, and real-time problem-solving, outperforming other models in tasks requiring structured thinking.

- Reinforcement Learning Training: Employs reinforcement learning techniques, allowing it to develop advanced reasoning skills and generate logically sound responses.

- Open-Source: Released under the MIT license, DeepSeek-R1 is freely available for use, modification, and redistribution.

- Distilled Model Variants: Includes distilled versions with parameter counts ranging from 1.5B to 70B, catering to various computational requirements and use cases.

Advantages of Deploying DeepSeek-R1 on Hyperstack

Hyperstack is a cloud platform designed to accelerate AI and machine learning workloads. Here's why it's an excellent choice for deploying DeepSeek-R1:

- Availability: Hyperstack provides on-demand access to powerful GPUs such as the NVIDIA H100, which are well suited to running large language models.

- Ease of Deployment: With pre-configured environments and one-click deployments, setting up complex AI models becomes significantly simpler on our platform.

- Scalability: You can easily scale your resources up or down based on your computational needs.

- Cost-Effectiveness: You pay only for the resources you use with our cost-effective cloud GPU pricing.

- Integration Capabilities: Hyperstack provides easy integration with popular AI frameworks and tools.

How to Deploy DeepSeek-R1 on Hyperstack

Follow the step-by-step process below to deploy DeepSeek-R1 on Hyperstack.

Step 1: Deploying a Virtual Machine with DeepSeek-R1

-

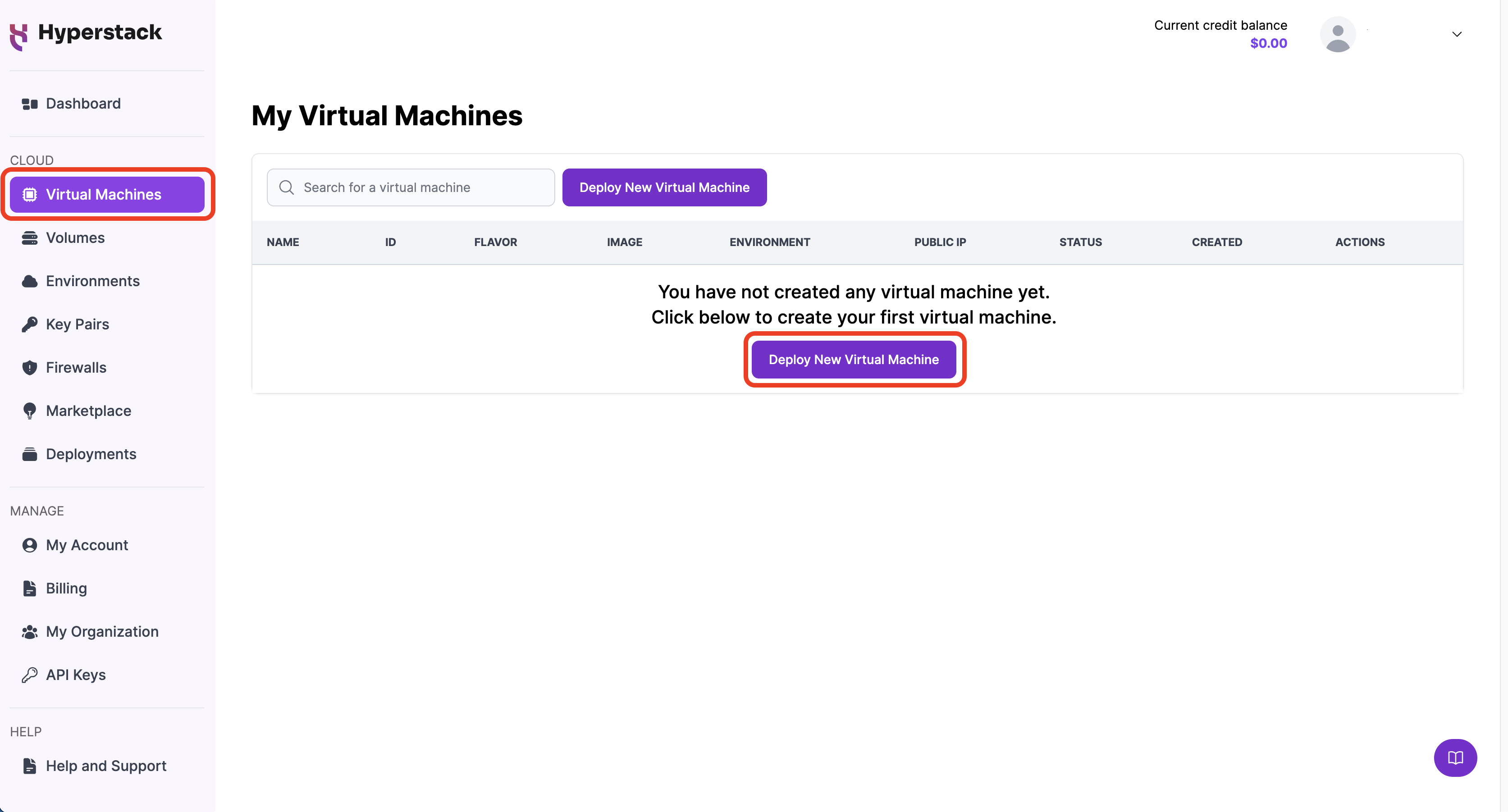

Start VM Deployment: Navigate to the "Virtual Machines" section in Hyperstack, and click "Deploy New Virtual Machine".

- Choose Hardware Configuration: Select the "8xH100-SXM5" flavor. This configuration provides a single VM with 8 NVIDIA H100 SXM5 GPUs, offering the performance needed for large models like DeepSeek-R1.

- Choose Environment: Select the environment where your virtual machine will be deployed. If this is your first time deploying a VM, see our Getting Started tutorial for creating an environment.

-

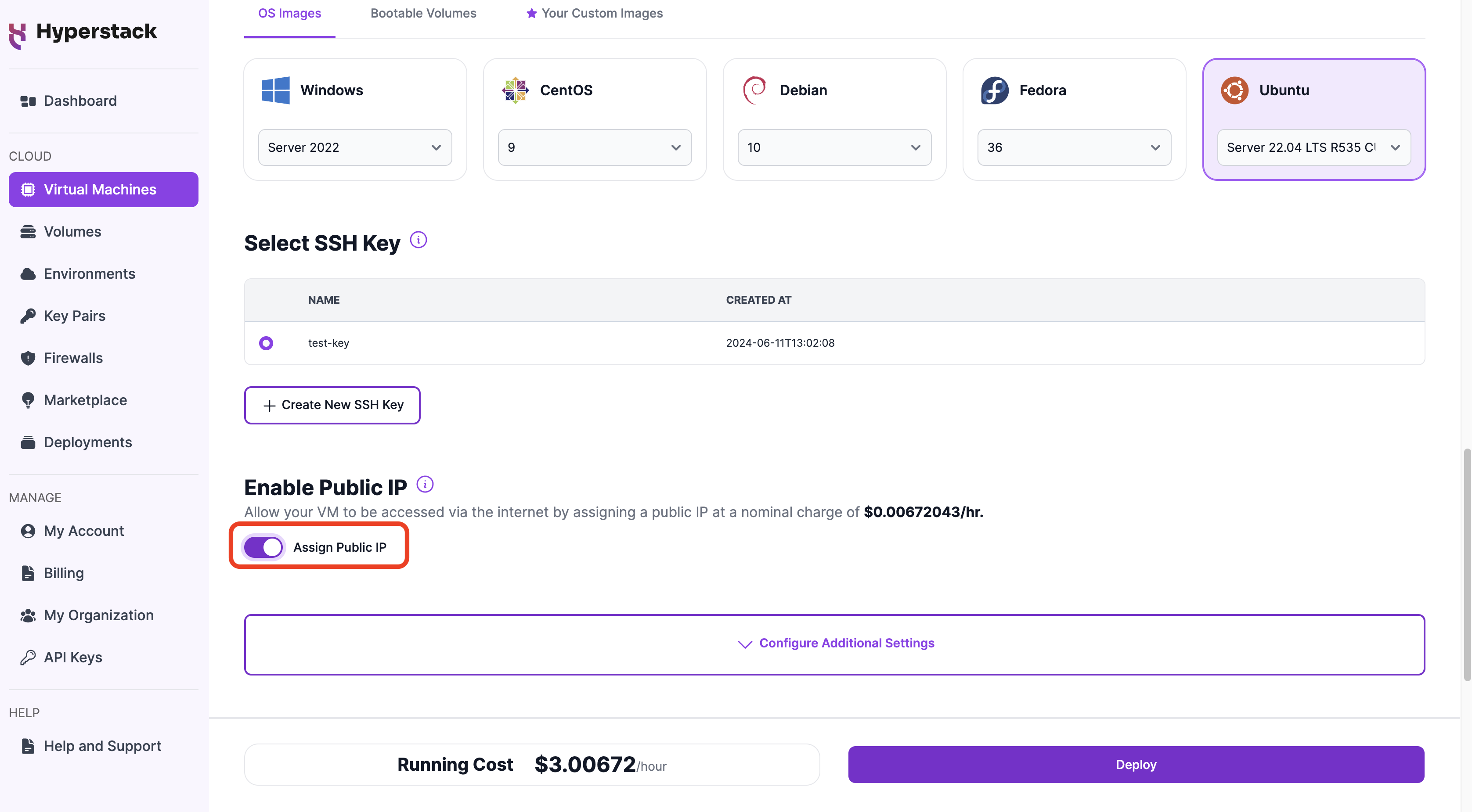

Select Image: Choose the "DeepSeek R1" operating system image.

- Choose SSH Keypair: Choose one of the keypairs in your account. If you don't have a keypair yet, see our Getting Started tutorial for creating one.

- Assign Public IP Address: Ensure you assign a Public IP to your Virtual Machine. This allows you to access your VM from the internet, which is crucial for remote management and API access.

- SSH Configuration: Make sure to enable SSH connection. You'll need this to securely connect and manage your VM.

- Finalize Deployment: Double-check all your settings and click the "Deploy" button to launch your virtual machine.
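Why a multi-GPU flavor? Because all 671B parameters must be held in GPU memory, a back-of-the-envelope weight estimate is a useful sanity check. The sketch below assumes 80 GB per H100 SXM5 and counts weights only (no KV cache, activations, or runtime overhead), so treat it as a rough sizing guide. Which precision the "DeepSeek R1" image actually serves is not stated here.

```python
# Rough weight-memory estimate for DeepSeek-R1 on the 8xH100-SXM5 flavor.
# Assumptions: 80 GB per H100 SXM5, 1 GB = 1e9 bytes, weights only
# (KV cache, activations, and framework overhead are ignored).

TOTAL_PARAMS = 671e9   # total parameters; all experts must be resident in memory
GPU_MEMORY_GB = 80     # assumed memory per H100 SXM5 GPU
NUM_GPUS = 8           # GPUs in the 8xH100-SXM5 flavor


def weight_memory_gb(params: float, bits_per_param: float) -> float:
    """Memory needed just to store the weights, in GB."""
    return params * bits_per_param / 8 / 1e9


def fits(params: float, bits_per_param: float,
         gpus: int = NUM_GPUS, gpu_gb: float = GPU_MEMORY_GB) -> bool:
    """True if the weights alone fit in the combined GPU memory."""
    return weight_memory_gb(params, bits_per_param) <= gpus * gpu_gb


if __name__ == "__main__":
    budget = NUM_GPUS * GPU_MEMORY_GB
    for bits in (16, 8, 4):
        need = weight_memory_gb(TOTAL_PARAMS, bits)
        print(f"{bits:>2}-bit weights: {need:.1f} GB needed vs {budget} GB available "
              f"-> fits: {fits(TOTAL_PARAMS, bits)}")
```

Lower-precision or quantized weights shrink the footprint proportionally, which is the main lever for fitting a model of this size on a single node.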

Step 2: Accessing Your VM

Once the initialization is complete, you can access your VM:

- Obtain Public IP Address: In the Hyperstack dashboard, locate your VM's details to find the public IP address needed for SSH connection.

- Connect via SSH

a. Open Terminal: On your local machine, open a terminal.

b. SSH Command: Use the following command, replacing [path_to_ssh_key], [os_username], and [vm_ip_address] with your specific details:

ssh -i [path_to_ssh_key] [os_username]@[vm_ip_address]

For example:

ssh -i /users/username/downloads/keypair_hyperstack ubuntu@0.0.0.0
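If you connect often, the ssh command from Step 2 can be scripted. The minimal Python sketch below builds the same command as an argument list and, before connecting, restricts the key file to owner read/write, since OpenSSH on macOS/Linux ignores private keys that other users can read. The helper names (`ssh_command`, `secure_key`, `connect`) are hypothetical, and the key path, username, and IP are the placeholder values from the example.

```python
import os
import shlex
import stat
import subprocess
from typing import List


def ssh_command(key_path: str, os_username: str, vm_ip_address: str) -> List[str]:
    """Build `ssh -i <key> <user>@<ip>` as an argument list (no shell needed)."""
    return ["ssh", "-i", os.path.expanduser(key_path), f"{os_username}@{vm_ip_address}"]


def secure_key(key_path: str) -> None:
    """Limit the private key to owner read/write (0600).

    On macOS/Linux, OpenSSH ignores private keys that other users can read.
    """
    os.chmod(os.path.expanduser(key_path), stat.S_IRUSR | stat.S_IWUSR)


def connect(key_path: str, os_username: str, vm_ip_address: str) -> int:
    """Fix key permissions, then open an interactive SSH session; returns ssh's exit code."""
    secure_key(key_path)
    return subprocess.run(ssh_command(key_path, os_username, vm_ip_address)).returncode


if __name__ == "__main__":
    # Placeholder values from the example above; replace with your own.
    cmd = ssh_command("/users/username/downloads/keypair_hyperstack", "ubuntu", "0.0.0.0")
    print(shlex.join(cmd))  # ssh -i /users/username/downloads/keypair_hyperstack ubuntu@0.0.0.0
```

Passing a list to `subprocess.run` avoids shell quoting issues if the key path contains spaces.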

Congratulations! You have successfully deployed and connected to your new virtual machine with DeepSeek-R1.

Step 3: Hibernating Your VM

When you're finished with your current workload, you can hibernate your VM to avoid incurring unnecessary costs. To learn more about hibernation and its costs, see the Hyperstack documentation on hibernation.

To hibernate your VM, follow these steps:

- Locate VM: In the Hyperstack dashboard, find your Virtual Machine.

- Hibernate Option: Look for a "Hibernate" option and click it to stop billing for compute resources while preserving your setup.
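To see what hibernation can save, the sketch below compares running the VM around the clock with hibernating it outside working hours. It assumes that the $3.00 per hour H100 price quoted in the FAQs is charged per GPU and that hibernation stops all compute billing; storage and other charges are ignored, so treat the figures as illustrative only.

```python
# Assumptions (not confirmed by Hyperstack): the $3.00/hour H100 price from the
# FAQs is charged per GPU, and hibernation stops all compute billing.
# Storage and any other charges are ignored.
H100_PRICE_PER_GPU_HOUR = 3.00
NUM_GPUS = 8  # the 8xH100-SXM5 flavor from Step 1


def compute_cost(hours: float, gpus: int = NUM_GPUS,
                 rate: float = H100_PRICE_PER_GPU_HOUR) -> float:
    """Compute charge for running `gpus` GPUs for `hours` hours."""
    return hours * gpus * rate


def hibernation_savings(active_hours_per_day: float, days: int) -> float:
    """Compute cost avoided by hibernating outside active hours instead of running 24/7."""
    always_on = compute_cost(24 * days)
    hibernated = compute_cost(active_hours_per_day * days)
    return always_on - hibernated


if __name__ == "__main__":
    # Example: 8 active hours per day over a 30-day month.
    print(f"Always on:          ${compute_cost(24 * 30):,.2f}")  # $17,280.00
    print(f"With hibernation:   ${compute_cost(8 * 30):,.2f}")   # $5,760.00
    print(f"Saved:              ${hibernation_savings(8, 30):,.2f}")  # $11,520.00
```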

FAQs

What is DeepSeek-R1?

DeepSeek-R1 is a 671B parameter, open-source Mixture-of-Experts language model designed for superior logical reasoning, mathematical problem-solving, and structured thinking tasks, offering high performance in complex inference and decision-making scenarios.

What are the key features of DeepSeek-R1?

DeepSeek-R1 offers advanced reasoning abilities, reinforcement learning-based training, and open-source accessibility under the MIT license. It includes distilled model variants from 1.5B to 70B parameters.

Are there smaller versions of DeepSeek-R1?

Yes, DeepSeek-R1 offers distilled model variants ranging from 1.5B to 70B parameters for different use cases.

How can I manage costs while using DeepSeek-R1 on Hyperstack?

You can use the "Hibernate" option on Hyperstack to pause your VM and reduce costs when not in use.

Can I increase the context size of DeepSeek-R1 on Hyperstack?

Yes, you can adjust the context size via the Open WebUI admin panel for longer input and output sequences.
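Open WebUI is commonly paired with an Ollama backend, where the context window is controlled by the `num_ctx` option. If the image serves the model through Ollama (an assumption; check on your VM, for example with `ollama list`), you can also set the context size per request through Ollama's REST API. The sketch below assumes Ollama on its default port 11434, queried from the VM itself after connecting over SSH, and a hypothetical model tag `deepseek-r1:671b`.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/chat"  # assumed: Ollama on its default port, on the VM
MODEL = "deepseek-r1:671b"                      # assumed tag; check `ollama list` on the VM


def build_chat_request(prompt: str, num_ctx: int, model: str = MODEL) -> dict:
    """Request body for Ollama's /api/chat with a custom context window."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,                  # return one JSON object instead of a stream
        "options": {"num_ctx": num_ctx},  # context window size in tokens
    }


def chat(prompt: str, num_ctx: int = 16384) -> str:
    """Send one prompt and return the assistant's reply text."""
    body = json.dumps(build_chat_request(prompt, num_ctx)).encode("utf-8")
    req = urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["message"]["content"]
```

For example, `chat("Summarize this report: ...", num_ctx=32768)` requests a 32K-token window for that call. Larger windows consume more GPU memory for the KV cache, so increase the value gradually.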

How do I get started with Hyperstack to host DeepSeek-R1?

Sign up at https://console.hyperstack.cloud to get started with Hyperstack.

Which GPU is best for hosting DeepSeek-R1 on Hyperstack?

For hosting DeepSeek-R1 on Hyperstack, we recommend NVIDIA H100 GPUs, such as the 8xH100-SXM5 flavor used in this tutorial. Our NVIDIA H100 GPU is available for $3.00 per hour.